
Mon 17 Jan 2022

Debugging Spark Applications Locally Using a Remote Container

Tags: spark pyspark docker vscode

One of the nifty features of any development environment is the ability to debug your application with breakpoints. Submitting a Spark job and waiting for it to complete every time you want to inspect a problem wastes a lot of time. Instead, Spark jobs can be debugged locally with breakpoints and the step over, step into, and step out commands.

Requirements

Setup

Let's create our project.

$ mkdir SparkDemo && cd SparkDemo

We will create a file .devcontainer/devcontainer.json. VS Code will use this file to access (or create) a development container with a well-defined tool and runtime stack.

$ mkdir .devcontainer

$ touch .devcontainer/devcontainer.json

devcontainer.json will look like this:

    {
      "name": "Dockerfile",
      // Sets the run context to one level up instead of the .devcontainer folder.
      "context": "../",
      // Update the 'dockerFile' property if you aren't using the standard 'Dockerfile' filename.
      "dockerFile": "../Dockerfile",
      // Add the IDs of extensions you want installed when the container is created.
      "extensions": ["ms-python.python"],
      // Set *default* container specific settings.json values on container create.
      "settings": {
        "terminal.integrated.shell.linux": null
      }
    }
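devcontainer.json is JSON with comments, so Python's json module cannot load it as-is. The sketch below is a minimal, hypothetical reader (not the parser VS Code uses) that strips `//` and `/* */` comments plus trailing commas, then resolves "context" and "dockerFile" the way the comments in the file describe: relative to the .devcontainer folder. The helper names and the project path /home/user/SparkDemo are placeholders.

```python
import json
import posixpath


def strip_comments(text):
    """Remove // and /* */ comments that appear outside JSON strings."""
    out, i, n = [], 0, len(text)
    in_string = escaped = False
    while i < n:
        ch = text[i]
        if in_string:
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
            out.append(ch)
            i += 1
        elif ch == '"':
            in_string = True
            out.append(ch)
            i += 1
        elif text.startswith("//", i):
            end = text.find("\n", i)  # line comment ends at the newline (kept)
            i = n if end == -1 else end
        elif text.startswith("/*", i):
            end = text.find("*/", i + 2)  # block comment ends after */
            i = n if end == -1 else end + 2
        else:
            out.append(ch)
            i += 1
    return "".join(out)


def strip_trailing_commas(text):
    """Drop commas that sit directly before } or ] (outside strings)."""
    out = []
    in_string = escaped = False
    for i, ch in enumerate(text):
        if in_string:
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
        elif ch == "," and text[i + 1:].lstrip()[:1] in ("}", "]"):
            continue  # trailing comma: skip it
        out.append(ch)
    return "".join(out)


def load_devcontainer(text):
    """Hypothetical helper: parse devcontainer.json-style JSON with comments."""
    return json.loads(strip_trailing_commas(strip_comments(text)))


def resolve(relative_path, project_root):
    """Resolve a devcontainer.json path against the .devcontainer folder."""
    return posixpath.normpath(posixpath.join(project_root, ".devcontainer", relative_path))


SAMPLE = """{
  "name": "Dockerfile",
  // Sets the run context to one level up instead of the .devcontainer folder.
  "context": "../",
  "dockerFile": "../Dockerfile",
  "extensions": ["ms-python.python"],
  "settings": {"terminal.integrated.shell.linux": null},
}"""

if __name__ == "__main__":
    config = load_devcontainer(SAMPLE)
    root = "/home/user/SparkDemo"  # hypothetical project location
    print(resolve(config["dockerFile"], root))  # /home/user/SparkDemo/Dockerfile
    print(resolve(config["context"], root))  # /home/user/SparkDemo
```

Both paths land in the project root, which is why the Dockerfile sits next to the .devcontainer folder rather than inside it.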

We will also need a Dockerfile in the project root (devcontainer.json refers to it as ../Dockerfile, relative to the .devcontainer folder). VS Code uses this file to build the container.

    FROM ubuntu:20.04

    ARG SPARK_VERSION=3.1.2
    ARG HADOOP_VERSION=3.2
    ARG MAVEN_VERSION=3.8.4
    # keep apt (e.g. the tzdata package) from prompting for input during the build
    ARG DEBIAN_FRONTEND=noninteractive

    # releases that are no longer current are served from archive.apache.org, not dlcdn.apache.org
    ENV MAVEN=https://archive.apache.org/dist/maven/maven-3/${MAVEN_VERSION}/binaries/apache-maven-${MAVEN_VERSION}-bin.tar.gz
    ENV SPARK=https://archive.apache.org/dist/spark/spark-${SPARK_VERSION}/spark-${SPARK_VERSION}-bin-hadoop${HADOOP_VERSION}.tgz

    # install dependencies
    RUN apt-get update && apt-get install -y software-properties-common gcc && \
        add-apt-repository -y ppa:deadsnakes/ppa
    RUN apt-get update && \
        apt-get install -y python3.6 python3-distutils python3-pip python3-apt tar git wget zip openjdk-8-jdk
    RUN ln -s /usr/bin/python3 /usr/bin/python

    WORKDIR /project

    # download
    RUN wget $SPARK
    RUN wget $MAVEN

    # extract, remove the archives and give the folders stable names
    RUN tar zxfv apache-maven-${MAVEN_VERSION}-bin.tar.gz
    RUN tar zxfv spark-${SPARK_VERSION}-bin-hadoop${HADOOP_VERSION}.tgz
    RUN rm spark-${SPARK_VERSION}-bin-hadoop${HADOOP_VERSION}.tgz
    RUN rm apache-maven-${MAVEN_VERSION}-bin.tar.gz
    RUN mv spark-${SPARK_VERSION}-bin-hadoop${HADOOP_VERSION} spark
    RUN mv apache-maven-${MAVEN_VERSION} maven

    ENV SPARK_HOME /project/spark
    ENV MAVEN_HOME /project/maven
    ENV JAVA_HOME /usr/lib/jvm/java-8-openjdk-amd64/jre
    ENV PATH $PATH:$MAVEN_HOME/bin:$SPARK_HOME/bin:$JAVA_HOME/bin

    # Spark config directory and PySpark sources for interactive shells
    RUN echo "export SPARK_CONF_DIR=\$SPARK_HOME/conf" >> /root/.bashrc
    RUN echo "export PYTHONPATH=\$PYTHONPATH:\$SPARK_HOME/python" >> /root/.bashrc

    # build the PySpark package shipped with the Spark distribution and install it
    RUN cd $SPARK_HOME/python/ && python setup.py sdist && python -m pip install dist/*.tar.gz

    CMD ["bash"]
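Once the container is up, a small script can confirm that what the Dockerfile sets up is visible to Python. This is a minimal sketch; the function name check_spark_environment is hypothetical. With the Dockerfile above, SPARK_HOME should report /project/spark and JAVA_HOME /usr/lib/jvm/java-8-openjdk-amd64/jre.

```python
import importlib.util
import os
import shutil


def check_spark_environment():
    """Hypothetical helper: collect what the Dockerfile is expected to provide."""
    return {
        # set by the ENV lines in the Dockerfile
        "SPARK_HOME": os.environ.get("SPARK_HOME"),
        "JAVA_HOME": os.environ.get("JAVA_HOME"),
        # $SPARK_HOME/bin and $JAVA_HOME/bin are appended to PATH
        "spark-submit on PATH": shutil.which("spark-submit") is not None,
        "java on PATH": shutil.which("java") is not None,
        # installed by the final pip install step; find_spec returns None if missing
        "pyspark importable": importlib.util.find_spec("pyspark") is not None,
    }


if __name__ == "__main__":
    for name, value in check_spark_environment().items():
        print(f"{name}: {value}")
```

Any False or None value points at the Dockerfile step to revisit before you start debugging.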

Lastly, we need to create a simple PySpark script, spark_demo.py, in the project root.

    from pyspark.sql import SparkSession

    spark = SparkSession.builder.getOrCreate()

    customers = spark.createDataFrame(
        [
            (1, "customer_1"),  # create your data here, be consistent in the types.
            (2, "customer_2"),
            (3, "customer_3"),
        ],
        ["id", "login"],  # add your column names here
    )
    customers.show()

    orders = spark.createDataFrame(
        [
            (1, 1, 50),
            (2, 2, 10),
            (3, 2, 10),
            (5, 1000, 19),  # customer 1000 does not exist, so the inner join drops this order
        ],
        ["id", "customer_id", "amount"],  # add your column names here
    )

    # inner join: keep only orders whose customer_id matches a customer id
    order_customer = orders.join(customers, orders["customer_id"] == customers["id"], "inner")
    order_customer.show()
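It helps to know what order_customer.show() should print before stepping through the join in the debugger. The plain-Python sketch below reproduces the inner join on the same rows as a simple hash join. It is only an illustration, not how Spark executes the join (Spark picks its own physical join strategy), and Spark does not guarantee the row order of the output. The third order value is treated as an opaque number here.

```python
from collections import defaultdict

# Same rows as the PySpark script: customers are (id, login),
# orders are (id, customer_id, third value).
CUSTOMERS = [(1, "customer_1"), (2, "customer_2"), (3, "customer_3")]
ORDERS = [(1, 1, 50), (2, 2, 10), (3, 2, 10), (5, 1000, 19)]


def inner_join(orders, customers):
    """Pair each order with every customer whose id equals the order's customer_id."""
    customers_by_id = defaultdict(list)
    for customer in customers:
        customers_by_id[customer[0]].append(customer)
    joined = []
    for order in orders:
        # orders without a matching customer (customer_id 1000) produce no rows
        for customer in customers_by_id.get(order[1], []):
            joined.append(order + customer)  # order columns first, then customer columns
    return joined


if __name__ == "__main__":
    for row in inner_join(ORDERS, CUSTOMERS):
        print(row)
```

Order 5 is the only one missing from the result: its customer_id 1000 has no match in customers.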

Running the container

Currently your project structure should look like this:

    SparkDemo
    |-- .devcontainer
    |   `-- devcontainer.json
    |-- Dockerfile
    `-- spark_demo.py
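VS Code looks for .devcontainer/devcontainer.json in the folder you open, so it is worth confirming the layout before continuing. A minimal sketch, assuming it is run from the folder that contains SparkDemo; missing_project_files is a hypothetical helper, not part of any tool.

```python
from pathlib import Path

# Files the walkthrough expects, relative to the SparkDemo folder
EXPECTED_FILES = [
    ".devcontainer/devcontainer.json",
    "Dockerfile",
    "spark_demo.py",
]


def missing_project_files(root):
    """Hypothetical helper: return the expected files that do not exist under root."""
    root = Path(root)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]


if __name__ == "__main__":
    # assumes the current directory is the parent of SparkDemo
    missing = missing_project_files("SparkDemo")
    print("layout OK" if not missing else f"missing: {missing}")
```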

Next, open the SparkDemo folder in VS Code:

$ cd SparkDemo

$ code .

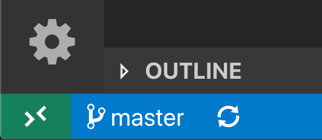

To open the project in the remote container, click the green button in the bottom-left corner of the VS Code window.